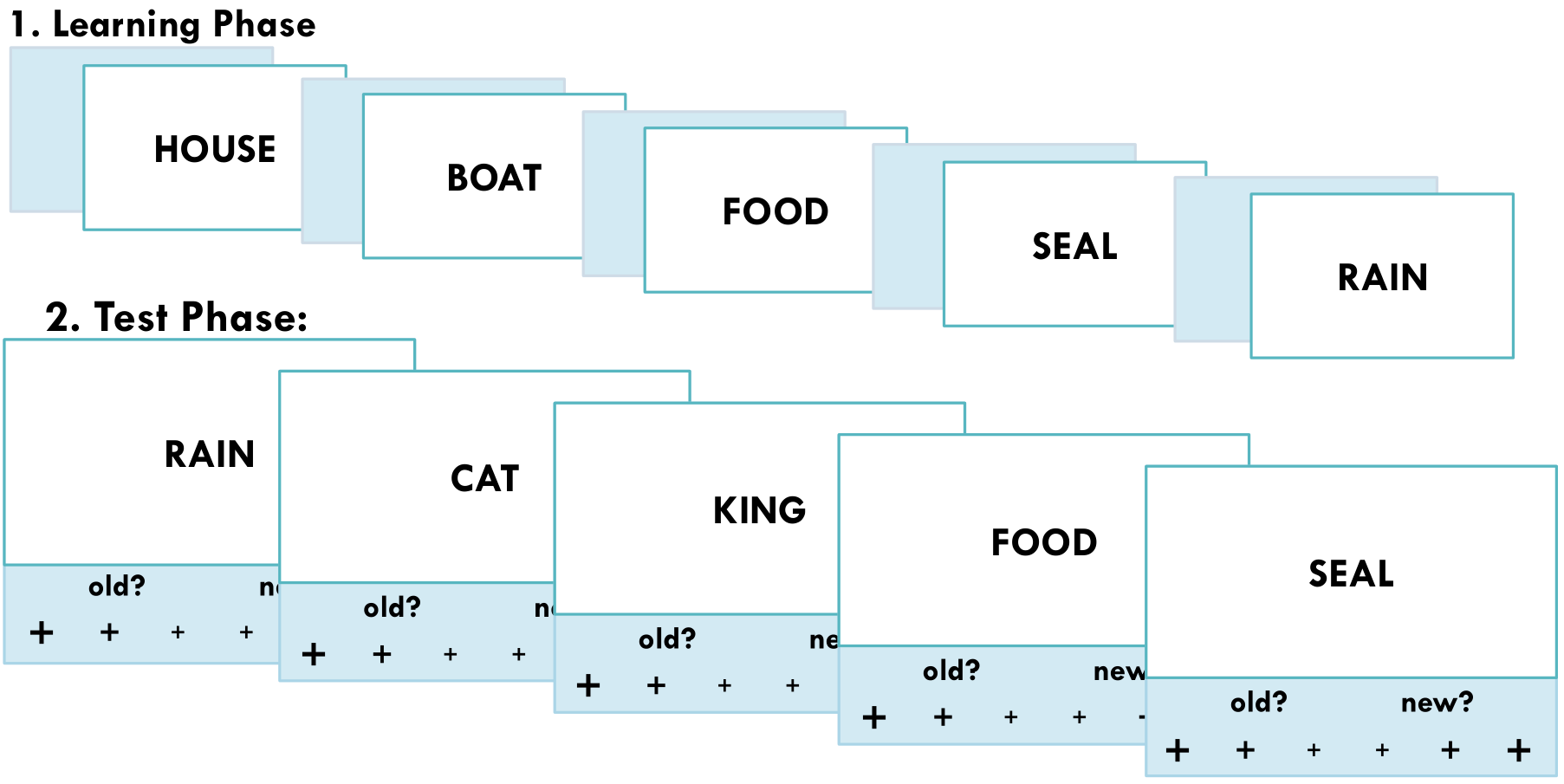

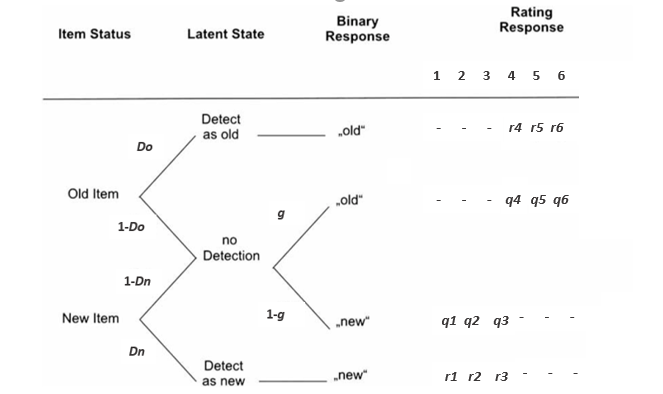

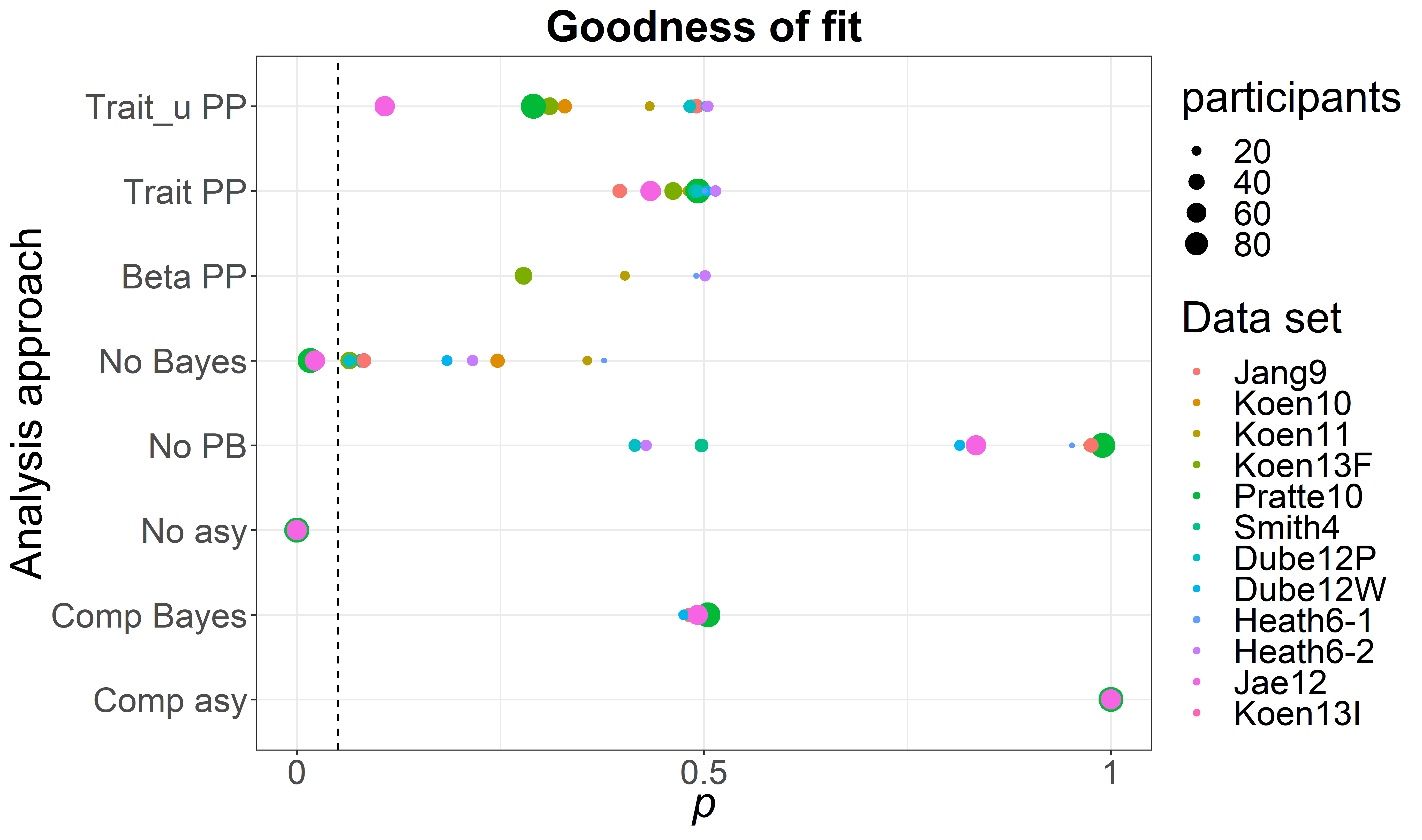

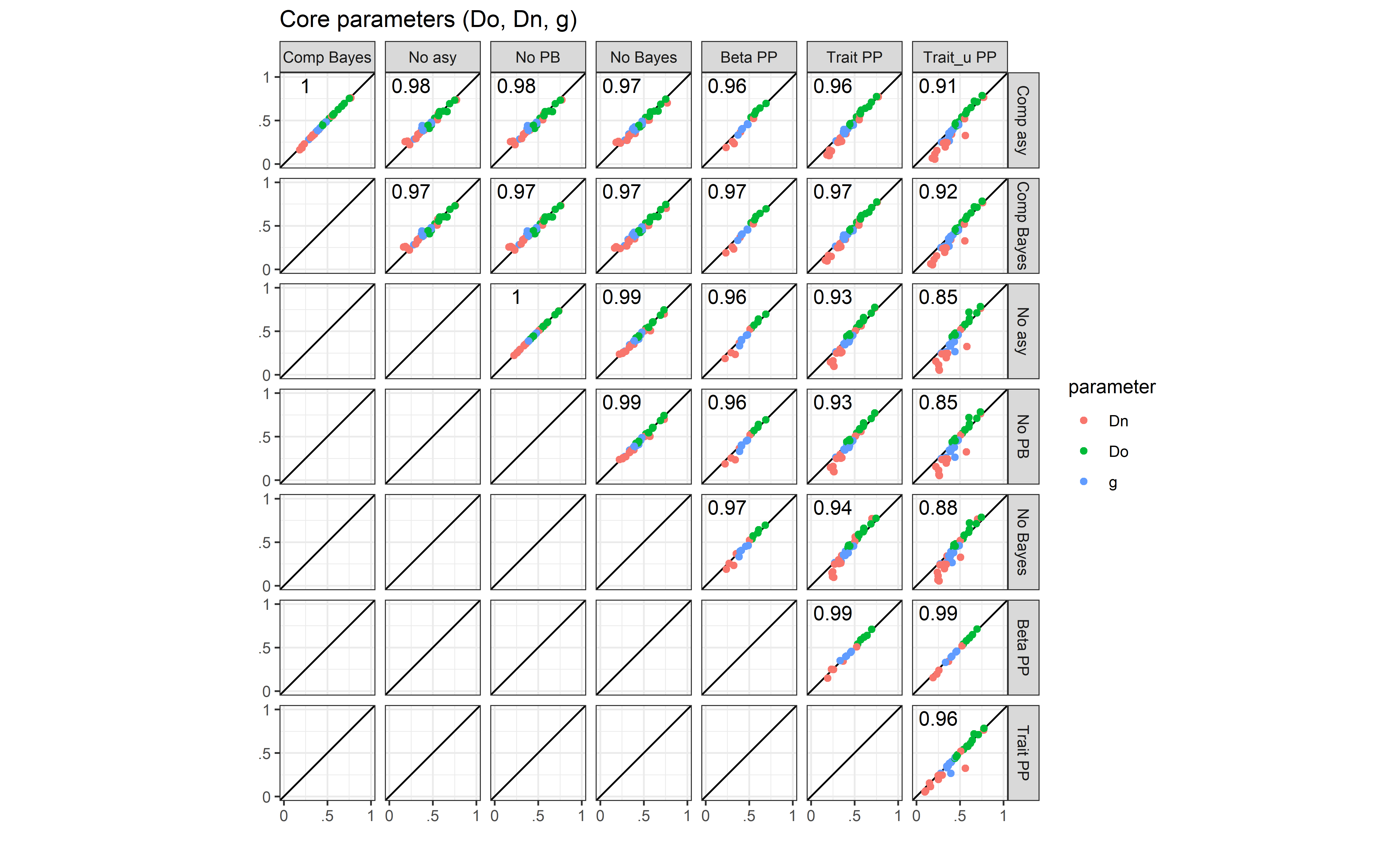

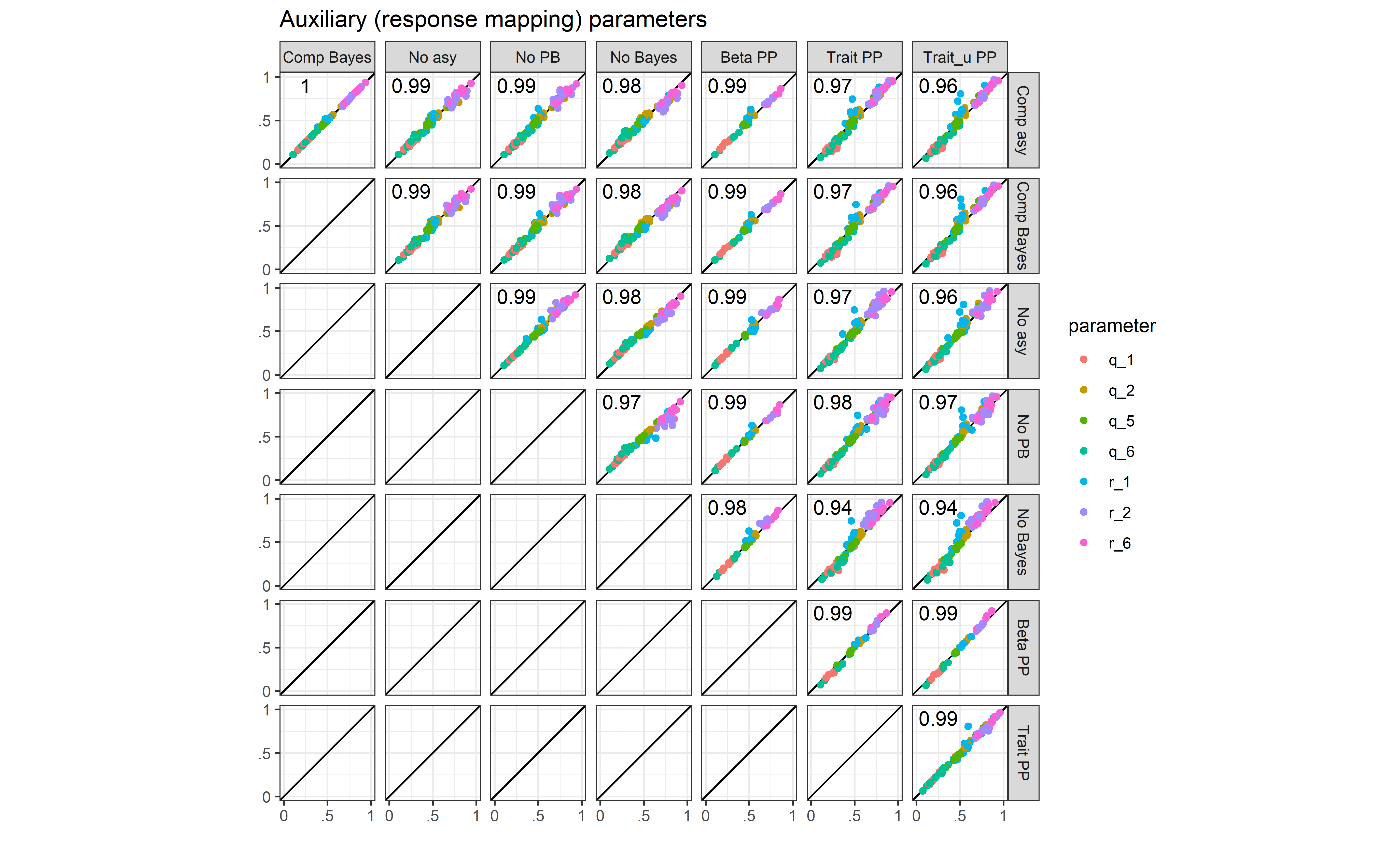

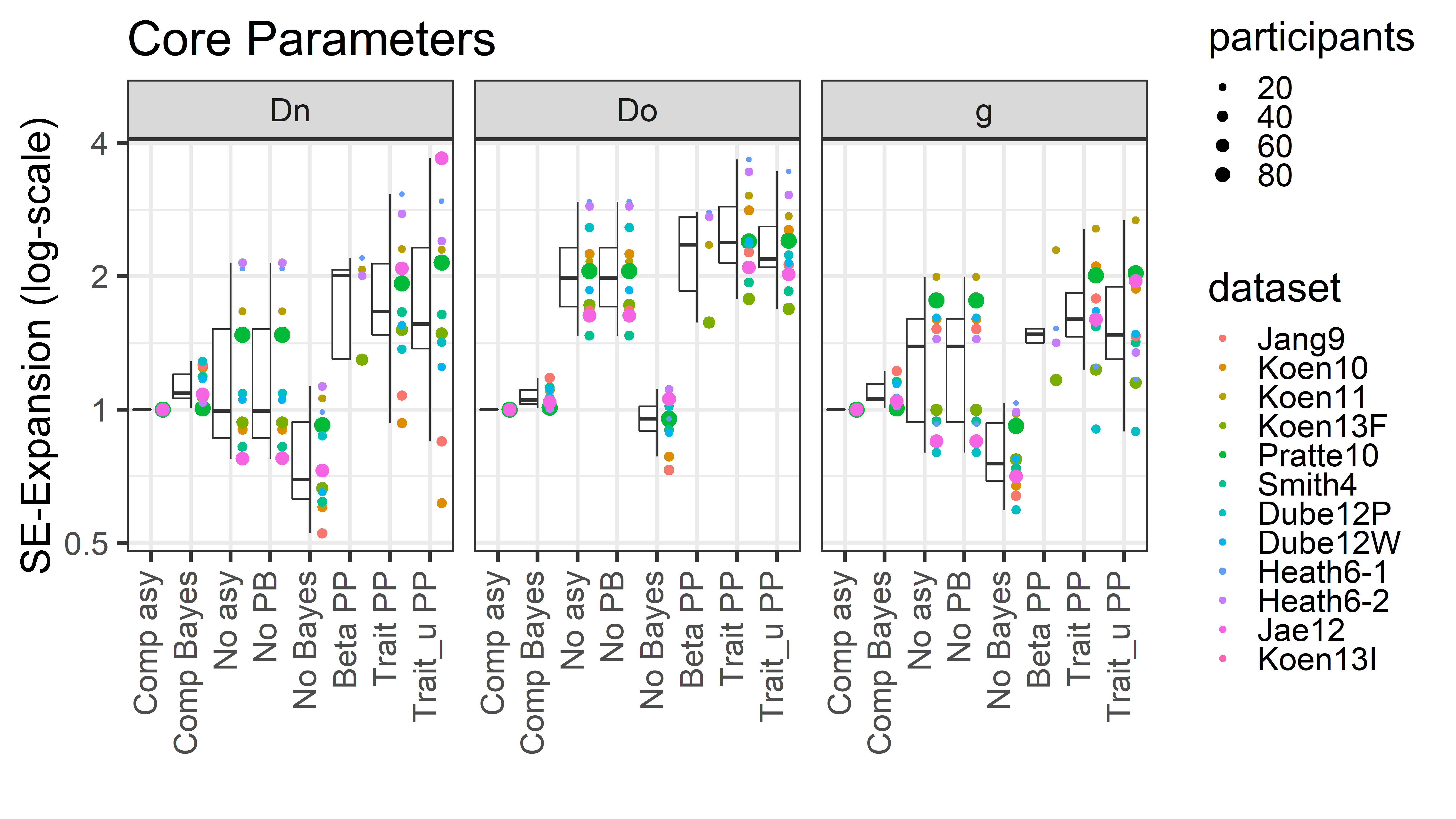

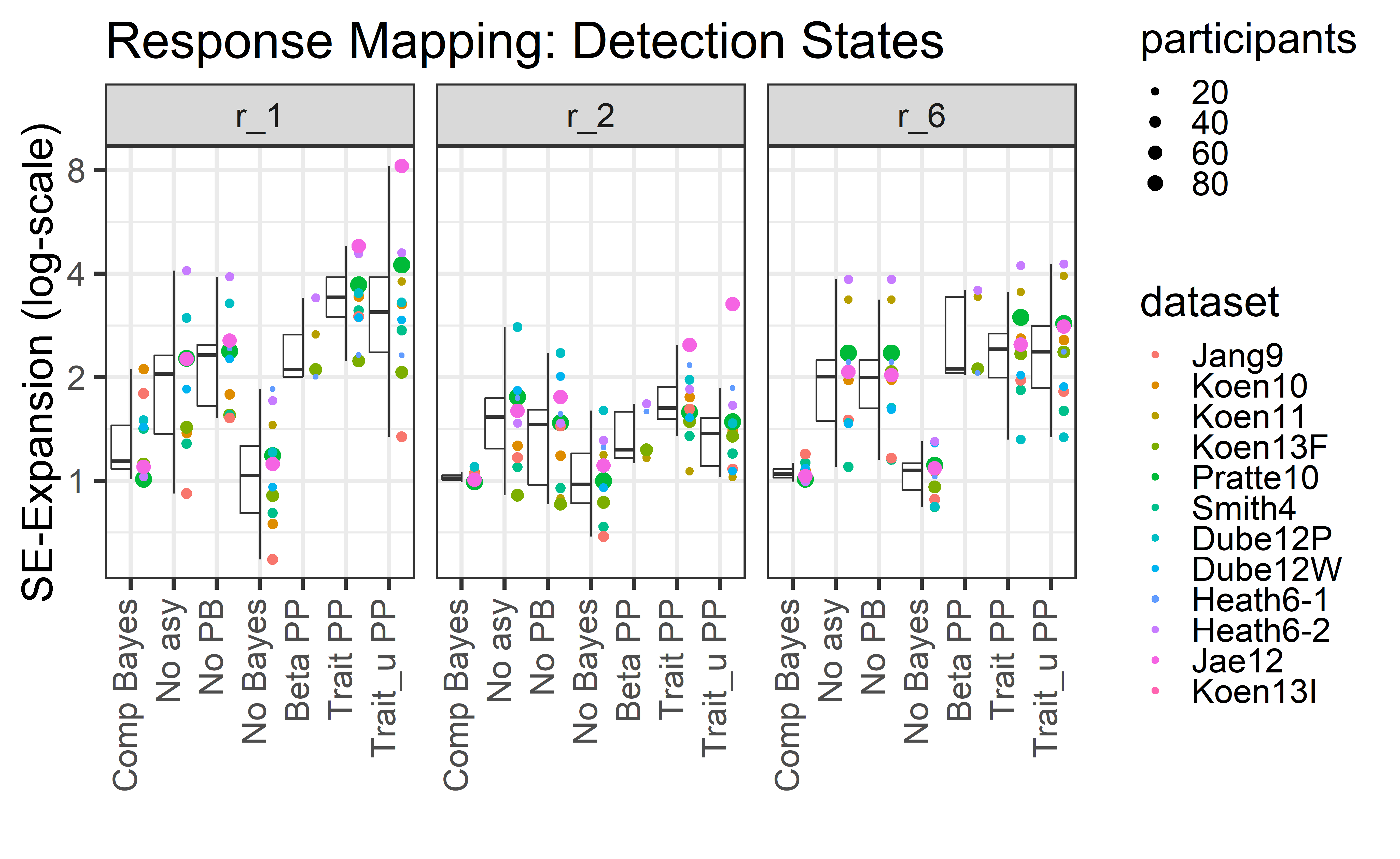

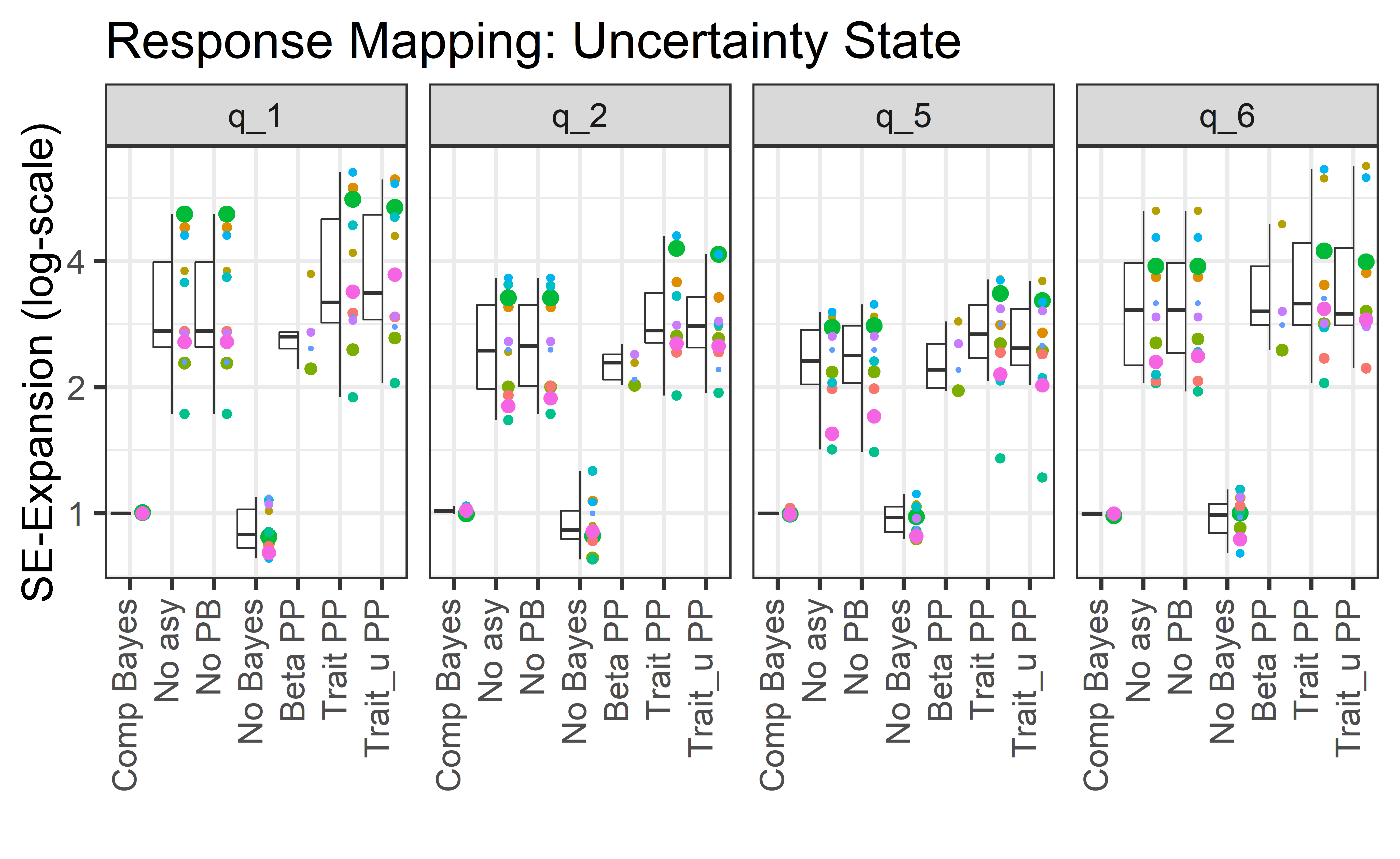

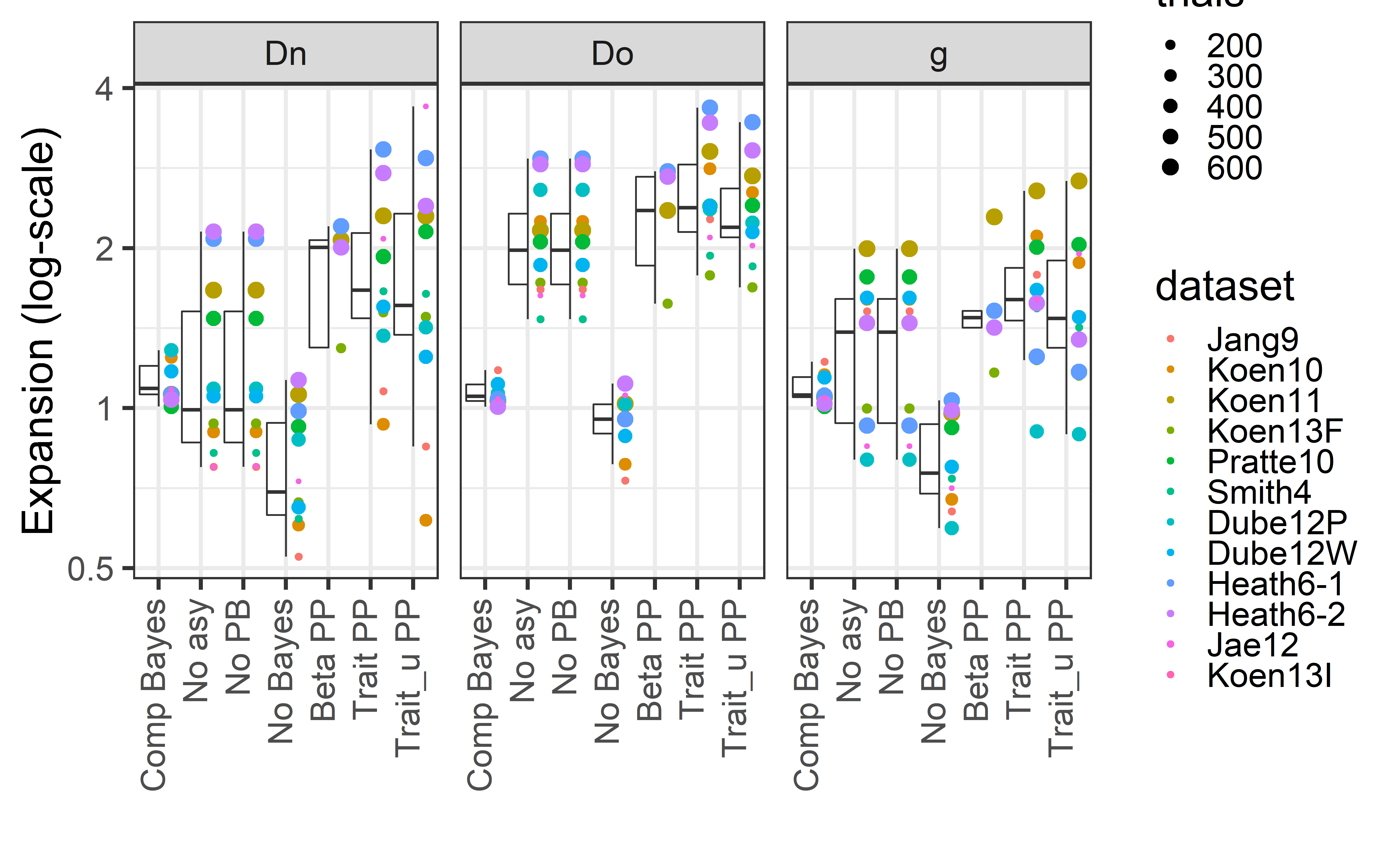

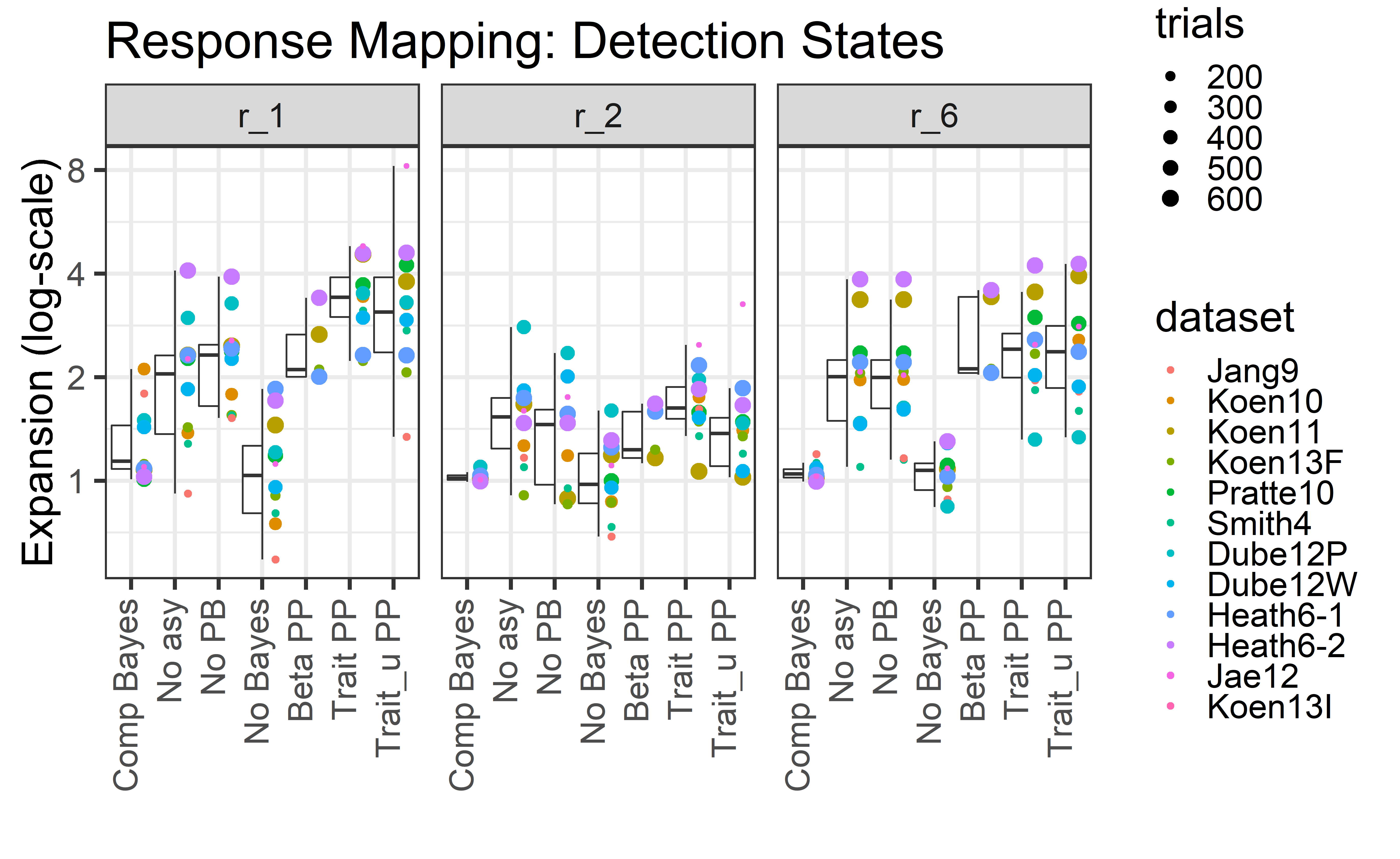

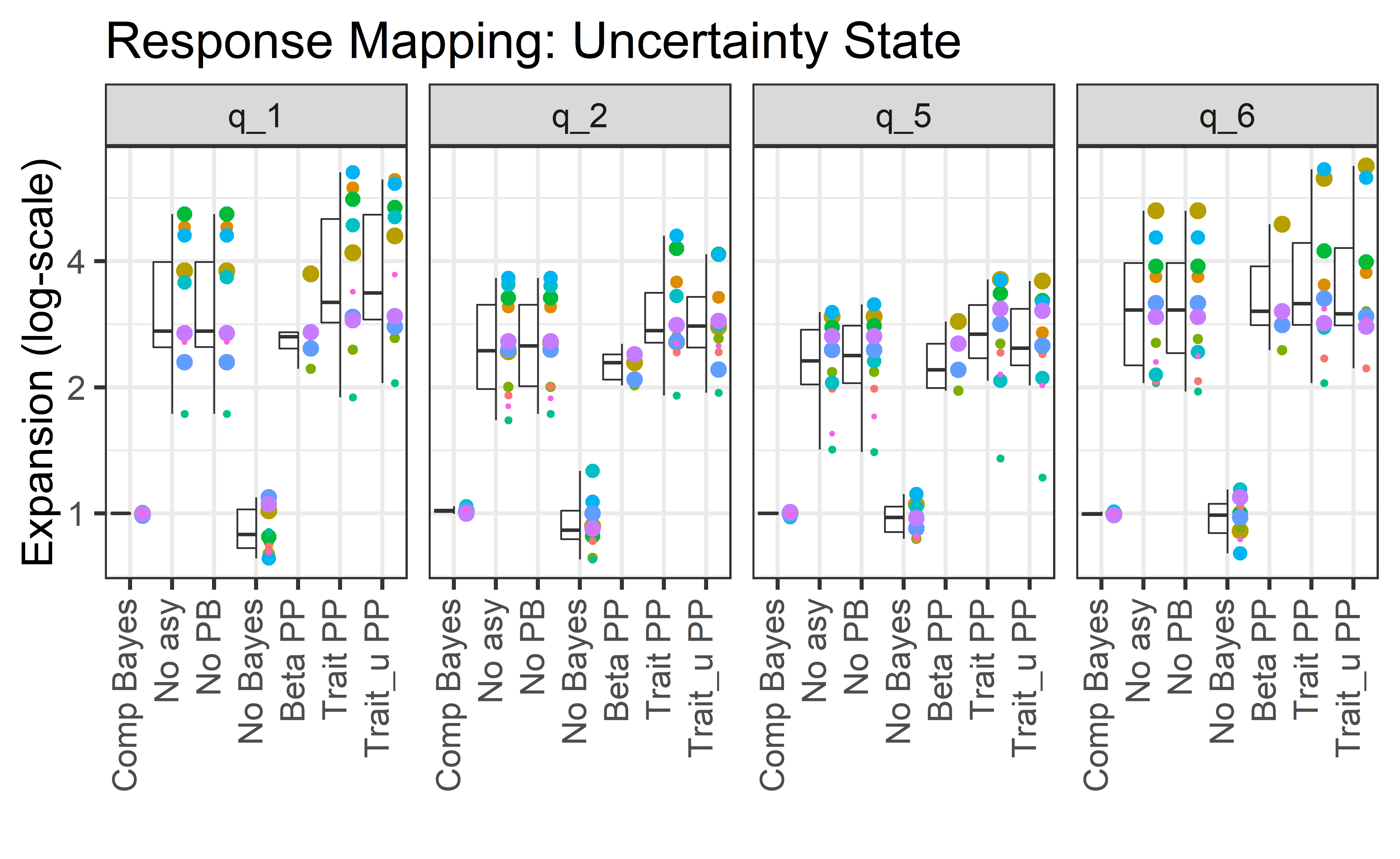

class: center, middle, inverse, title-slide # A Bayesian and Frequentist Multiverse Pipeline for MPT models ## Applications to Recognition Memory ### Henrik Singmann (University of Zurich) <br/>Daniel W. Heck (Universität Mannheim) <br/>Georgia Eleni Kapetaniou (University of Zurich) <br/>Julia Groß (Heinrich-Heine-Universität Düsseldorf) <br/>Beatrice G. Kuhlmann (Universität Mannheim) <br/><a href="https://github.com/mpt-network/MPTmultiverse" class="uri">https://github.com/mpt-network/MPTmultiverse</a> ### July 2018 --- ## Multiverse Approach - Statistical analysis usually requires several (more or less) *arbitrary* decisions between reasonable alternatives: - Data processing and preparation (e.g., exclusion criteria, aggregation levels) - Analysis framework (e.g., statistical vs. cognitive model, frequentist vs. Bayes) - Statistical analysis (e.g., testing vs. estimation, fixed vs. random effects, pooling) -- - Combination of decisions spans **multiverse** of data and results (Steegen, Tuerlinckx, Gelman, & Vanpaemel, 2016): - Usually one path through multiverse (*or* 'garden of forking paths', Gelman & Loken, 2013) is reported. - Valid conclusions cannot be contingent on arbitrary decisions. -- - **Multiverse analysis** attempts exploration of possible results space: - Reduces problem of selective reporting by making fragility or robustness of results transparent. - Conclusions arising from many paths are more credible than conclusions arising from few paths. - Helps identification of most consequential choices. -- - **Limits of multiverse approach:** - Implementation not trivial. - Results must be commensurable across multiverse (e.g., estimation versus hypothesis testing). --- class: small, inline-grey ### Current Project - DFG 'Scientific Network' grant to Julia Groß and Beatrice Kuhlmann - 6 meetings over 3 years with 15 people plus external experts - "Hierarchical MPT Modeling – Methodological Comparisons and Application Guidelines" - Multinomial processing tree (MPT) models: class of discrete-state cognitive models for multinomial data (Riefer & Batchelder, 1988) - MPT models traditionally analyzed with frequentist methods (i.e., `\(\chi^2/G^2\)`) and aggregated data - Several hierarchical-Bayesian approaches exist. Do we need those? -- ### Our Multiverse - Statistical framework: - Frequentist (i.e., maximum-likelihood) - Bayesian (i.e., MCMC) - Pooling: - Complete pooling (aggregated data) - No pooling (individual-level data) - Partial pooling (hierarchical-modeling): Individual-level parameters with group-level distribution - Results: 1. Parameter point estimates: MLE and posterior mean 2. Parameter uncertainty: ML-SE and MCMC-SE 3. Model adequacy: `\(G^2\)` `\(p\)`-value and posterior predictive `\(p\)`-value (Klauer, 2010) --- class: inline-grey ## Our Multiverse Pipeline - Developed (with Daniel Heck) `R` script which performs MPT multiverse analysis: - Requires participant-wise response frequencies and MPT model file (`eqn` syntax) 1. Traditional approach: Frequentist asymptotic complete pooling 2. Frequentist asymptotic no pooling 3. Frequentist no-pooling with parametric bootstrap 4. Bayesian complete pooling 5. Bayesian no pooling 6. Bayesian partial pooling I: Beta-MPT (Smith & Batchelder, 2010) 6. Bayesian partial pooling II: Latent trait MPT (Klauer, 2010) 6. Bayesian partial pooling III: Latent trait MPT w/o correlation parameters - Frequentist approaches use `MPTinR` (Singmann & Kellen, 2013) - Bayesian approaches use `TreeBUGS` (Heck, Arnold, & Arnold, 2018), which uses `Jags` -- - Currently developing (with Marius Barth) proper `R` package, `MPTmultiverse`: https://github.com/mpt-network/MPTmultiverse - Some new features and better usability --- class: small ### Recognition Memory Task with Confidence Rating  --- class: small ### 2-high threshold model (2HTM) for 6-point confidence-rating data (e.g., Bröder, et al., 2013) .pull-left2[  ] - `\(r1 = r_1\)` - `\(r2 = (1- r_1)r_2\)` - `\(r3 = (1- r_1)(1 - r_2)\)` - same for `\(q\)` <br/><br/> - `\(r6 = r_6\)` - `\(r5 = (1- r_6)r_5\)` - `\(r4 = (1- r_6)(1 - r_5)\)` - same for `\(q\)` <br/><br/> - For `\(r\)`, `\(r_1\)` and `\(r_6\)` expected to have most data - For `\(q\)`, `\(q_5\)` and `\(q_2\)` expected to have most data <br><br> - Data provides 10 independent data points: - 3 core parameters: `\(Dn\)`, `\(Do\)`, and `\(g\)`. - 8 response mapping parameters `\((r_1\)`, `\(q_1\)`, ...) - For identifiability: `\(r_2 = r_5\)` (lowest two confidence response mapping of detection states equated) --- ## 6-point ROC Data Corpus from Klauer & Kellen (2015): 12 Data Sets <br/> .pull-left2[ | Data set |Sample | Mean No of Trials | |:-------------|:-------------:|:-----:| |Dube & Rotello (2012, E1, Pictures (P))| 27 | 400 | |Dube & Rotello (2012, E1, Words (W))| 22 | 400 | |Heathcote et al. (2006, Exp. 1) | 16 | 560 | |Heathcote et al. (2006, Exp. 2) | 23 | 560| |Jaeger et al. (2012, Exp. 1, no cue)|63|120| |Jang et al. (2009)|33|140| |Koen & Yonelinas (2010, pure study)|32|320| |Koen & Yonelinas (2011)|20|600| |Koen et al. (2013, Exp. 2, full attention)|48|200| |Koen et al. (2013, Exp. 4, immediate test)|48|300| |Pratte et al. (2010)|97|480| |Smith & Duncan (2004, Exp. 2)|30|140| ] .pull-right2[ - Total N = 459 - Mean trials = 350 3. Model adequacy: `\(G^2\)` `\(p\)`-value and posterior predictive `\(p\)`-value (Klauer, 2010) 1. Parameter point estimates: MLE and posterior mean 2. Parameter uncertainty: ML-SE and MCMC-SE ] --- class: small, center <!-- --> --- class: small .left-pull2[ <!-- --> ] Mean concordance correlation coefficient (CCC) ``` ## # A tibble: 3 x 3 ## parameter mean digits ## <fct> <chr> <dbl> ## 1 Dn 0.9149 2 ## 2 Do 0.9631 2 ## 3 g 0.8459 2 ``` ``` ## # A tibble: 8 x 3 ## cond_y mean digits ## <fct> <chr> <dbl> ## 1 Comp asy 0.9668 2 ## 2 Comp Bayes 0.9685 2 ## 3 No asy 0.9557 2 ## 4 No PB 0.956 2 ## 5 No Bayes 0.9598 2 ## 6 Beta PP 0.9738 2 ## 7 Trait PP 0.957 2 ## 8 Trait_u PP 0.9116 2 ``` --- <!-- --> ``` ## # A tibble: 7 x 3 ## parameter mean digits ## <fct> <chr> <dbl> ## 1 q_1 0.8788 2 ## 2 q_2 0.8948 2 ## 3 q_5 0.9855 2 ## 4 q_6 0.9429 2 ## 5 r_1 0.731 2 ## 6 r_2 0.6975 2 ## 7 r_6 0.8887 2 ``` ``` ## # A tibble: 8 x 3 ## cond_y mean digits ## <fct> <chr> <dbl> ## 1 Comp asy 0.9811 2 ## 2 Comp Bayes 0.9826 2 ## 3 No asy 0.9827 2 ## 4 No PB 0.9824 2 ## 5 No Bayes 0.9674 2 ## 6 Beta PP 0.9902 2 ## 7 Trait PP 0.9731 2 ## 8 Trait_u PP 0.969 2 ``` --- <!-- --> --- <!-- --> --- <!-- --> --- class: inline-grey # Summary and Conclusion - Multiverse analysis of 6-point ROCs with 2HTM: 1. General agreement in terms of model evaluation: 2HTM provides adequate account; exception are no pooling methods. 2. Surprisingly strong agreement in terms of point estimates 3. Complete pooling (i.e., aggregated data) appears to provide overconfident SEs (rarely too large SEs). Size of overconfidence depends strongly on parameter. -- - **Note**: Results do not suggest that hierarchical models are not necessary. - **Aggregation artifacts can occur**: - Learning & practice (e.g., Estes, 1956, Psych. Bull.; Evans, Brown, Mewhort, & Heathcote, in press, Psych. Review) - Confidence-rating ROCs in signal-detection theory (Trippas, Kellen, Singmann, et al., in press, PB&R) - Whether or not aggregation artifacts occur, depends on task, model, and parameter. - Multiverse analysis (at least with a selection) appears generally advisable. -- - Future: Variety of different models (e.g., source-memory, prospective memory, IAT, process dissociation, hindsight bias) - `MPTmultiverse` provides `R` package for Bayesian and frequentist multiverse analysis of MPTs: https://github.com/mpt-network/MPTmultiverse --- count: false class: small ### References - Barchard, K. A. (2012). Examining the reliability of interval level data using root mean square differences and concordance correlation coefficients. *Psychological Methods*, 17(2), 294-308. https://doi.org/10.1037/a0023351 - Bröder, A., Kellen, D., Schütz, J., & Rohrmeier, C. (2013). Validating a two-high-threshold measurement model for confidence rating data in recognition. *Memory*, 21(8), 916-944. https://doi.org/10.1080/09658211.2013.767348 - Dube, C., Starns, J. J., Rotello, C. M., & Ratcliff, R. (2012). Beyond ROC curvature: Strength effects and response time data support continuous-evidence models of recognition memory. *Journal of Memory and Language*, 67(3), 389-406. https://doi.org/10.1016/j.jml.2012.06.002 - Jaeger, A., Cox, J. C., & Dobbins, I. G. (2012). Recognition confidence under violated and confirmed memory expectations. *Journal of Experimental Psychology: General*, 141(2), 282-301. https://doi.org/10.1037/a0025687 - Koen, J. D., Aly, M., Wang, W.-C., & Yonelinas, A. P. (2013). Examining the causes of memory strength variability: Recollection, attention failure, or encoding variability? *Journal of Experimental Psychology: Learning, Memory, and Cognition*, 39(6), 1726-1741. https://doi.org/10.1037/a0033671 - Steegen, S., Tuerlinckx, F., Gelman, A., & Vanpaemel, W. (2016). Increasing Transparency Through a Multiverse Analysis. *Perspectives on Psychological Science*, 11(5), 702-712. https://doi.org/10.1177/1745691616658637 --- count: false <!-- --> --- count: false <!-- --> --- count: false <!-- -->